He’s actually incredibly average looking as a half Asian…

Looks like a baddie in a 1970s kung fu movie.

I can’t exactly place what is different with those images and actual Brad Pitt. I mean some of them are clearly recognizable as Brad Pitt. Is it just the eyes? It’s messing with my mind.

I think mostly the eyes, but also nose is wider and nostrils more flared. And obviously hair color.

Enjoy the little things, for one day you may look back and realize they were the big things. — Robert Brault

During training most of the model is given an image and text description pair. The model looks at an text input, converts it to a vector representing this input that supposedly captures the meaning of your text, then it uses that vector to try and generate an image. It will either compare that image with the original image in the input data pair and tweak the model to get it to generate an image as close to the original as possible, or use another model to determine if it is an real image, or one generated by a model.

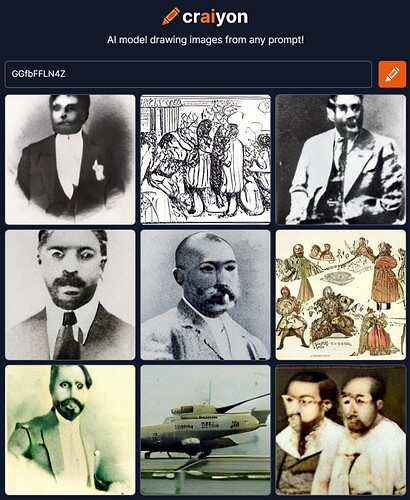

For the vectors, you can literally enter random numbers and it would still generate an image. That’s essentially what’s happening when you enter a random string.

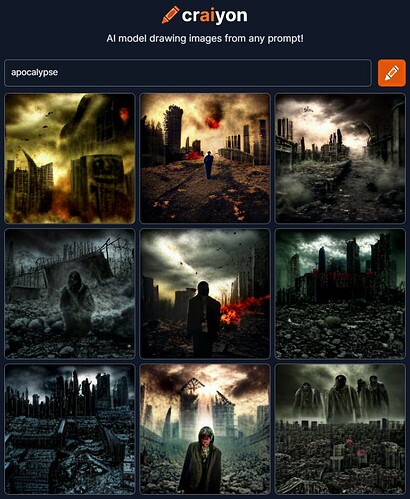

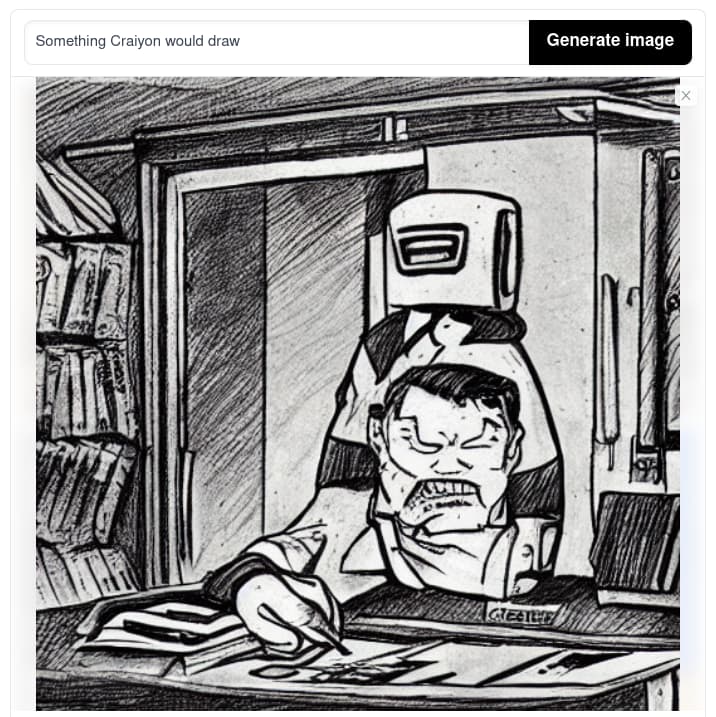

Yes - the question the article raises is basically just how these networks react to “non-traditional” input (e.g. drawing the opposite of something). Although one might expect random images to be created, this is actually not the case.

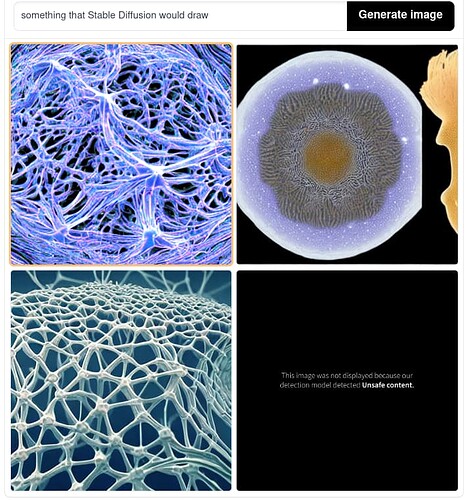

Craiyon doesn’t seem to be able to handle negation that well “not a flower” will also produce flower pictures. However, I still find it interesting how it reacts to “gibberish” as an input.

It isn’t random because the model looks at the high dimensional feature vector generated from your text input, and see how close each feature is to the rest of the training data. Since each text would only have one feature vector generated by the model, each image generated by the model, usually with random perturbation added to generate different images, would largely stay on the same theme, since the feature vector is closest in high dimensional space to that theme.

So the feature vector generated from GGfbFFLN4Z obviously corresponds to some type of portrait in high dimensional space, that’s why every image generated with that vector would be some kind of portrait.

Now I want to know what that unsafe content is…

Popular art topic!

Benecio del Toro