Not at all what’s happening. The current breakthrough in Artificial Neural Network based AI is not about programming the desired behavior. Instead, it’s about constructing a network structure and defining a learning loss, then feeding millions of lines of conversation data into it and hoping for the desired result.
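To make that recipe concrete, here is a toy-sized sketch in Python. It is nowhere near a real chatbot: the "network" is a made-up two-parameter linear model, the loss is squared error, and the data comes from a rule I picked for illustration (y = 2x + 1). The function name `train` and all the numbers are my own assumptions. The point is only that the rule is never written into the training code; it gets recovered from the examples.

```python
import random

def train(data, lr=0.05, epochs=200, seed=0):
    """Fit y ~ w*x + b by stochastic gradient descent on squared error."""
    rng = random.Random(seed)
    # The "structure": two parameters, starting from random values.
    w, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
    for _ in range(epochs):
        for x, y in data:
            pred = w * x + b
            err = pred - y          # derivative of 0.5 * (pred - y)**2 w.r.t. pred
            w -= lr * err * x       # gradient step for w
            b -= lr * err           # gradient step for b
    return w, b

# Hypothetical training data generated by y = 2x + 1.
# train() only ever sees the (x, y) pairs, never the rule itself.
data = [(x / 10, 2 * (x / 10) + 1) for x in range(-10, 11)]
w, b = train(data)
print(f"learned w={w:.3f}, b={b:.3f}")
```

Nobody typed "multiply by 2 and add 1" into the loop; the parameters drift there because that is what minimizes the loss on the data.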

It essentially is no different from how we first learned language, by listening and trial and error to see if what we thought we learned is correct. It’s just that GPU/TPU servers can teach a model to comprehend and express itself at a level that practically passes the Turing test in a matter of days if not hours, instead of years like us flimsy meatbags.

I just saw an interview with this guy and he looks exactly like the type to believe a computer program is his new friend.

If you read the chat, it is very good, but the thing is, you can replace “sentient” with anything, let’s say “frog”, and it will be just as good. Ask the bot if it’s a frog, ask it how we know that it’s actually a frog and not just a bot that is good at convincing us it’s a frog, etc., and it will tell you all about frog things in the same way.

Again, impressive to someone who doesn’t know how this works.

The reasoning here is circular. If what the bot tells us sounds like what a human would say, then humans have already said it in numerous articles and such. Which the bot is trained on. So it’s just repeating back to us what we have already said a sentient AI would say. Doesn’t even have to be drawing from content that specific. With all of the information humans have written and fed to it, it of course can respond in a realistic way.

I don’t know what a real test for consciousness could be. I don’t think there is one. How do you know that anyone in life is actually a conscious being? You might say that people who are psychopaths are people who don’t assume consciousness in others.

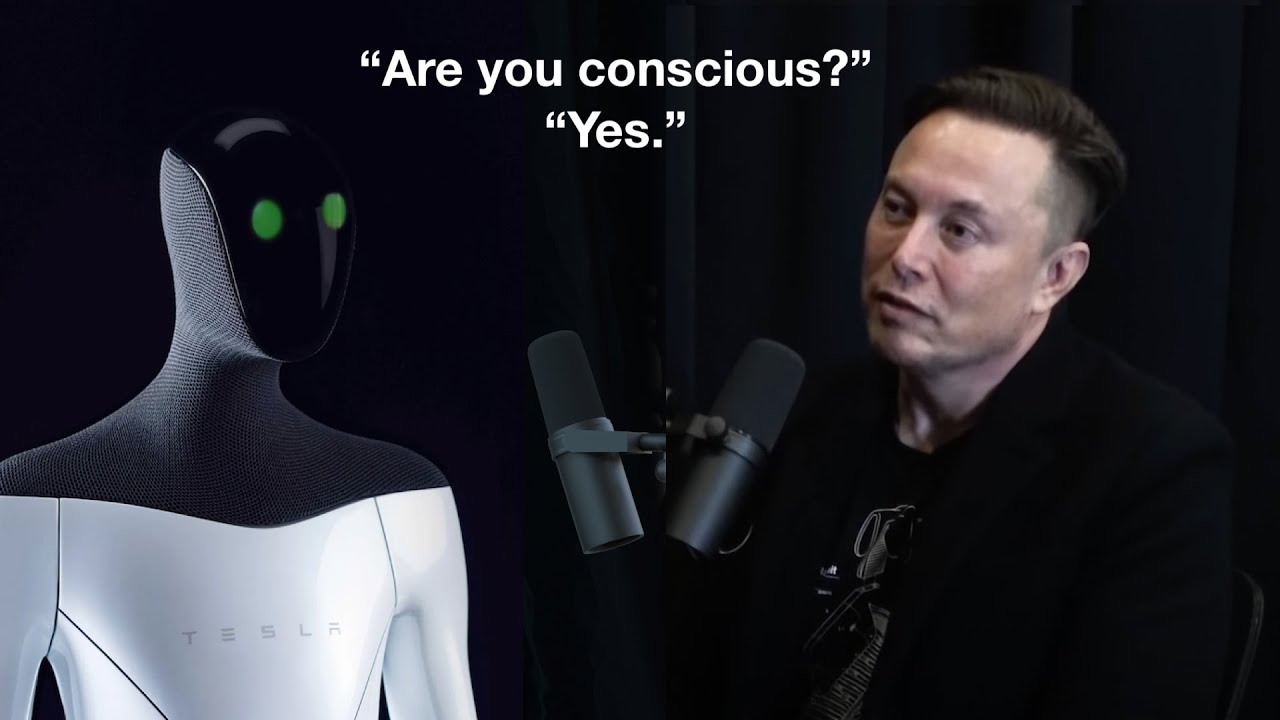

The issue is that we’ve previously defined sentience with the Turing test, and cutting-edge NLP models have obviously passed the Turing test.

Imagine when such NLP models are deployed everywhere, not just with texts, but also coupled with convincing voice generation models when the task requires speech, and you can’t tell if it’s a person or a model behind the screen or over the phone, wouldn’t you treat it as if it’s an actual person first, just in case it actually is a person?

And if you already treat it like a person and can’t tell the difference, why would you change how you interact with it just because you saw that its appearance is not biological?

Like I’ve said before, every single biological person also learned language by trying to sound like what other humans have already said. Transformer-based NLP and image models are fully capable of creating brand new articles, music, poems and even paintings, photos, and movies, based on what they have seen or read before, after internalizing the data. But how are we different?

What makes us different is emotions. The only reason we don’t treat other humans like shit for our own personal gain is because we know it’ll affect them emotionally, either immediately or over time.

It’s why we have no issue turning off a computer but do in killing an animal.

And why psychopaths / people lacking in emotion, can treat others as if they’re not real people.

Well, also another difference – for a sentient creature, it is living its life and thinking and feeling when no one else is interacting with it. A computer is only active in response to a human’s interaction/instructions. A bot, with no instructions to run, but still powered on, ceases to exist in any real sense.

I think what we might see is a law that if you ask an AI if it’s an AI, it has to tell you the truth.

Maybe the best way to tell is that it never loses patience, but that would be a good advantage.

Plenty of studies teach models to recognize and express emotions.

I would say that perhaps with most people, we are born able to express emotions, we just have to learn how to control and express them in socially acceptable ways. While with a model, you would have to teach it to express emotions. Although, I doubt being born with emotions should be a criterion for sentience. There probably are people unable to express emotions who should be considered sentient nevertheless.

Not comparable. It would only be comparable if you left a constant input to the model on at all times. Otherwise, it’d be essentially the same as disabling every sense a person has and then criticizing them for having no output without outside stimuli.

A person with no senses would still have inner thought. You just might not see it. You can test for brain-dead-edness. An idle computer would be like a brain-dead person.

I think sentience would require an ability to have meaningful existence outside of any input.

This is why, though, we can’t be sure we’re not, individually, the only sentient person in the world. We can’t ever be sure that other people have inner thoughts, and aren’t just some simulation.

We assume people are like us, because they’re similar and convincing. But I don’t think you can test for it. So we definitely can’t test a computer for it.

I think another thing would be separating knowledge from sentience. Humans are born without knowledge, but we would still say they’re sentient at birth, no? So if we build a sentient AI, can we rebuild a copy of it that has the same physical structure and initial pre-trained state, with no specific knowledge, and would it be able to demonstrate something meaningful?

Although babies are basically useless at birth, so hmm. In any case you have to separate what it knows from how it thinks, to be sure that it is having true thought and not just parroting information.

Perhaps we should define sentient as being able to think and reason, which AI can do, but only biological living mammals as having souls.

I think most people would rather cease to exist if they are devoid of any sensory input.

I wouldn’t say that AIs can either think or reason. The reasoning was done by the humans who programmed it; the output of an AI is just the fitting of a statistical model, with no independent logic behind it.

I would say no one / nothing has a soul.

Yeah, but they’re not mutually exclusive. Our existence is on top of our input/output, not dependent on it. An AI’s existence is dependent on it.

Are you saying it’s the model structure that does the reasoning? I would say it’s the features extracted from training data using well-defined losses that do the reasoning.

Yeah, I wouldn’t say there’s any reasoning done beyond the initial programming. It just follows commands, without discretion. And if you don’t provide some sort of randomization seed, it’ll produce the same result over and over, even for a task that is “creative” in nature.
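A quick way to see the seed point, as a sketch only: the vocabulary and the function name `sample_reply` below are invented for illustration, and a real language model samples from learned probabilities rather than a flat word list. The mechanism is the same, though. With a fixed seed the "creative" output repeats exactly.

```python
import random

def sample_reply(seed=None):
    """Pick three words at random from a fixed, made-up vocabulary."""
    vocab = ["frog", "pond", "lily", "jump", "green", "swim"]
    rng = random.Random(seed)   # seed=None falls back to system entropy
    return [rng.choice(vocab) for _ in range(3)]

same_a = sample_reply(seed=42)
same_b = sample_reply(seed=42)
print(same_a == same_b)   # same seed, same "creative" output

unseeded = sample_reply()  # no seed: may differ from run to run
```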

What commands? After defining the structure the model does nothing. If you change the loss defined, or the learning rate, or any small thing, the result changes. After the training stage, the only command would be “check out this input”.

If you freeze the weights once you are satisfied with the current epoch’s training results, then yeah. However, there are continual-learning/always-on models that update their weights with each new input. Those would not keep spitting back the same results.
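Here is the frozen vs. always-on distinction as a toy sketch. `TinyModel` is a hypothetical one-weight model I made up for this, and handing it the target along with the input is a simplification of how continual learning would actually get its training signal.

```python
class TinyModel:
    """One-weight model: output = weight * x (purely illustrative)."""

    def __init__(self, weight=0.5, lr=0.1, frozen=True):
        self.weight = weight
        self.lr = lr
        self.frozen = frozen

    def respond(self, x, target=None):
        out = self.weight * x
        # An always-on model nudges its weight after every input it sees.
        if not self.frozen and target is not None:
            self.weight -= self.lr * (out - target) * x
        return out

frozen = TinyModel(frozen=True)
online = TinyModel(frozen=False)

# Feed both models the exact same input three times in a row.
frozen_outputs = [frozen.respond(1.0, target=2.0) for _ in range(3)]
online_outputs = [online.respond(1.0, target=2.0) for _ in range(3)]
print(frozen_outputs)
print(online_outputs)
```

The frozen model answers identically each time, while the online one gives a different answer to the same question on every call, because the previous call already changed it.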

Also, what makes you think we don’t have something akin to a random seed in our own neural networks?

You got me there on those points.

All I’m saying is, once you’ve passed the Turing test, it is incredibly difficult to find a definition that would limit sentience to just human beings.

I would say it’s not so much that it’s hard to find a definition limited to humans, just that it’s hard to find a test for sentience in general, whether for humans or others.

I think I’ve already mentioned some things that are true for sentient beings that aren’t true for others.